Maarten Beelen on What Underpins Precision

Responsible for system integration and software management, and one of the co-founders of Preceyes

How did you get involved with Preceyes?

In 2011, when we decided to take this robotic innovation from a research project to a commercially viable product.

Why is now the right time for the first robot-assisted eye surgery in a human?

We now have the technology to make a device that is precise enough to meet the requirements of eye surgery, and to apply this precision to surgery in a way that will potentially improve patient outcomes.

What has the feedback from retinal surgeons been so far?

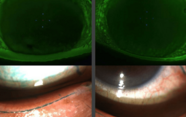

The surgeons we’ve spoken to are all very enthusiastic, especially about the increased level of control and steadiness – their hand movements are scaled down, tremor is filtered out, and we improve precision by a factor of 10 to 20.
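Motion scaling and tremor filtering can be illustrated with a minimal single-axis sketch. It assumes a simple first-order low-pass filter, a hypothetical scale factor of 0.1 (1:10 scaling), a hypothetical 2 Hz cutoff, and a 1 ms sample period. Physiological hand tremor sits at roughly 8 to 12 Hz, well above the cutoff, while deliberate surgical motion is much slower. Preceyes has not described its actual filter design or parameters, so none of these values or function names should be read as theirs.

```python
import math


def lowpass_alpha(cutoff_hz: float, sample_period_s: float) -> float:
    """Smoothing factor of a discrete first-order (RC-style) low-pass filter."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # filter time constant in seconds
    return sample_period_s / (rc + sample_period_s)


def scale_and_filter(hand_positions_mm, scale=0.1, cutoff_hz=2.0, dt=0.001):
    """Map sampled hand positions (mm) to scaled, tremor-attenuated tool positions (mm).

    All defaults are hypothetical illustration values, not Preceyes parameters.
    """
    if not hand_positions_mm:
        return []
    alpha = lowpass_alpha(cutoff_hz, dt)
    filtered = hand_positions_mm[0]  # start the filter at the first sample to avoid a jump
    tool_positions = []
    for x in hand_positions_mm:
        # Exponential smoothing: fast oscillations (tremor) are attenuated,
        # while slow, deliberate motion passes through largely unchanged.
        filtered += alpha * (x - filtered)
        # Motion scaling: a 10 mm hand movement becomes a 1 mm tool movement.
        tool_positions.append(scale * filtered)
    return tool_positions
```

With these example values, a 10 Hz oscillation is reduced to roughly a fifth of its amplitude before scaling, while a steady hand position is passed through unchanged apart from the 1:10 scaling. A real system would work in three dimensions and would likely use a more sophisticated filter; the single axis here only keeps the idea visible.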

What can this robotic-assisted device do to extend what a surgeon is capable of doing today?

It really extends his or her capabilities in tissue manipulation. This robot doesn’t “think,” and it doesn’t make surgical decisions; it simply assists the surgeon.

Robots have software, which brings its own potential risks. How do you squash bugs and maximize safety?

We start with extensive and thorough risk analyses of everything that can go wrong, and build countermeasures, with redundancy where required. Then we implement and test the software.

How long until this technology goes mainstream?

Ophthalmic surgery and robotics finally met today – so now, this technology is state of the art. To expand this project, and to enter the market, we’ll need a few more years and surgeons willing to adopt and work on developing this technology – and forward-thinking investors.

Did the procedure go as expected?

We were very happy with the results today. Everything went as expected: the system was fully operational, and the surgeon was able to manipulate the tissue using the robot without any difficulty.

What kind of operating system runs on the robot and the human interface device?

You won’t be familiar with it – it’s not Linux or Windows! It’s a dedicated operating system for real-time control, ensuring the robot can receive a command every millisecond.

What about software updates? Is the robot internet connected?

Right now we have a software freeze, and when we bring the product to the market, the software will remain frozen, which means that a user cannot modify it on their own. We only want fully tested software to be used. The robot is currently not connected to the internet but this is something we are considering in the future. This would allow us to upload fully tested software improvements that have gone through a rigorous risk analysis.

Every procedure that you perform with the robot gives you more information – how will you use it?

We see a lot of areas in surgery really reaping the benefits of big data. With this system we will record every movement of the instrument, and this will be a great benefit for postsurgical evaluation, and will allow us to compare different methods for surgical tasks. It can also be used to train surgeons and may help warn surgeons if what they are doing could potentially lead to a complication.

How do you build a user interface for a surgical robot?

The best user interface is no user interface, so we don’t use one during surgery. During surgery, the surgeon should be looking through the microscope and concentrating. For now we use a touchscreen, but we are working on user interfaces that will meet this prime directive!

If surgeons ask for different functions or options for the robot, how do you implement them?

We gather a lot of surgeon feedback, and then we choose which feedback we think will really bring clinical benefits for the patient. That’s our first filter, and then we prioritize and select the features we want to bring into our system.

Can one improvise during surgery with a robot?

Sure! This first release of the system just follows the hand movements of the surgeon. It has no decision-making or cognitive abilities, and it has no sensors to measure where the eye is. The surgeon is responsible for all movements – we’re just extending the possibilities in terms of precision. In the future, we will be adding sensors for automation of certain tasks, and then the robot can really act as a “second eye” for the surgeon.

And your prediction?

Simple. This will revolutionize eye surgery.

I spent seven years as a medical writer, writing primary and review manuscripts, congress presentations and marketing materials for numerous – and mostly German – pharmaceutical companies. Prior to my adventures in medical communications, I was a Wellcome Trust PhD student at the University of Edinburgh.