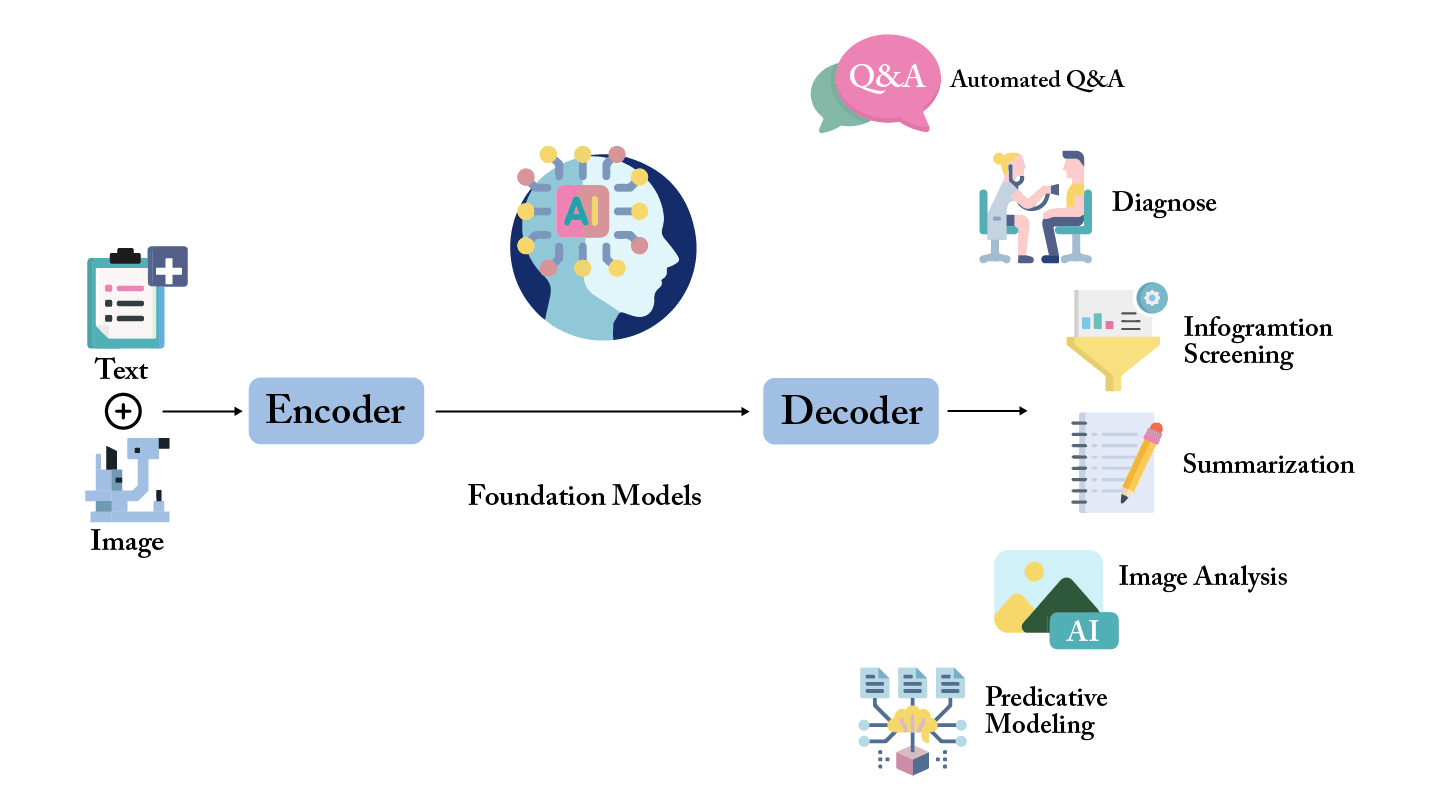

Why foundation models matter in ophthalmology

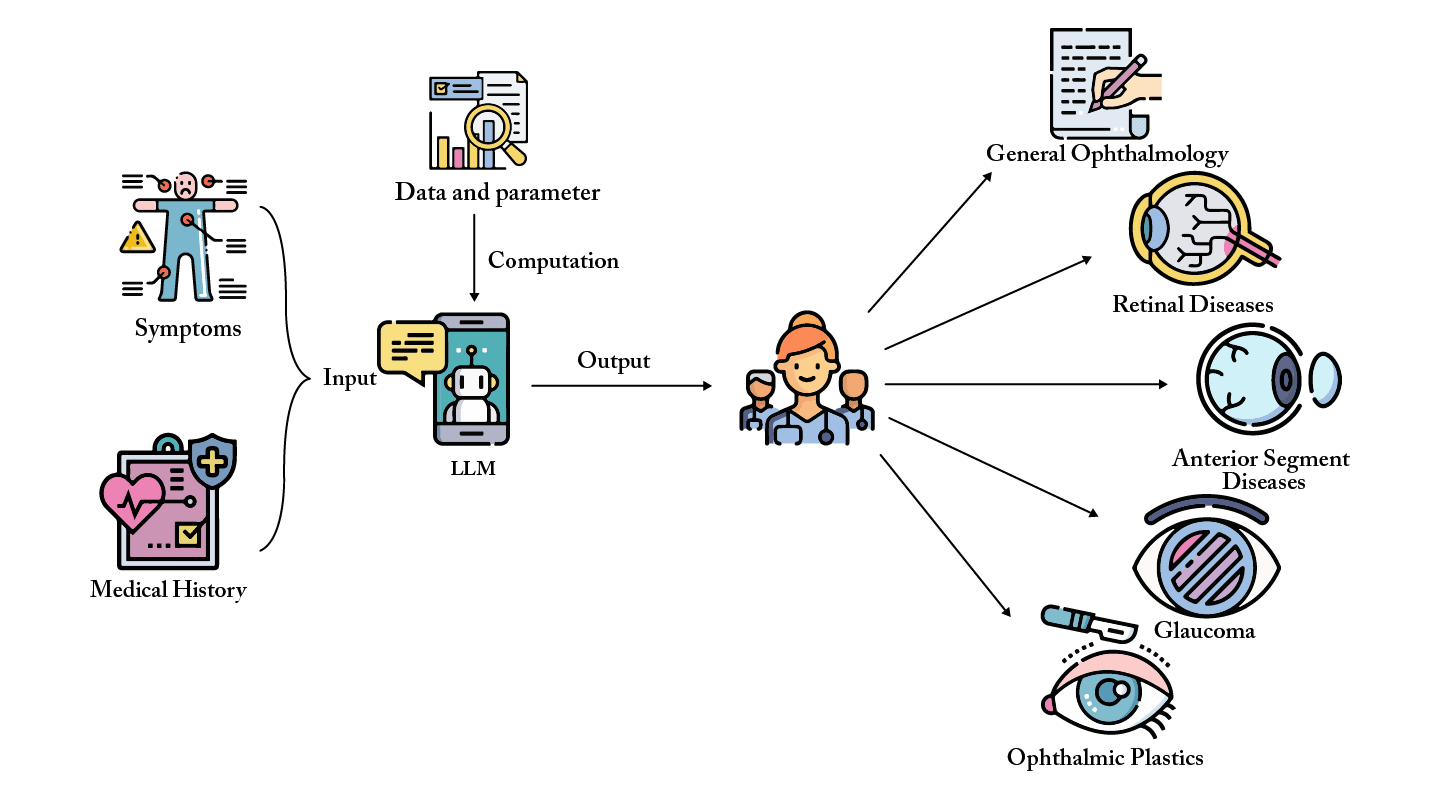

The rise of LLMs in eye care

History has shown that AI tools succeed only when embedded in a supportive ecosystem. For LLMs and foundation models in ophthalmology, this means:

The next frontier: AI equity in ophthalmology

Q1. What is the main advantage of foundation models in ophthalmology compared to traditional AI algorithms?

A. They eliminate the need for ophthalmologists entirely

B. They can generalize across multiple tasks and modalities ✅

C. They are only useful for diabetic retinopathy

D. They require no data for training

Q2. How are LLMs being used in ophthalmic clinical workflows?

A. Designing new imaging devices

B. Auditing imaging reports and generating structured outputs ✅

C. Performing surgical procedures

D. Manufacturing intraocular lenses

Q3. What is the major concern with current ophthalmic AI datasets?

A. They contain no OCT scans

B. They are mostly from high-income countries, risking bias ✅

C. They are too small to be useful

D. They are entirely synthetic

Q4. What is identified as the next frontier for AI in ophthalmology?

A. Improving OCT resolution

B. Expanding cataract surgery access

C. Achieving AI equity and global fairness ✅

D. Reducing hospital staff training

Q5. Why is a supportive ecosystem necessary for deploying LLMs and foundation models?

A. To reduce internet costs

B. To ensure multidisciplinary collaboration and safety ✅

C. To replace ophthalmologists entirely

D. To avoid using clinical data
