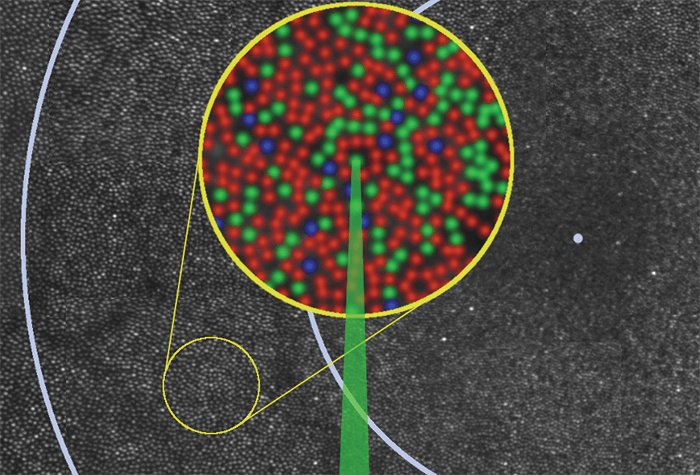

Using a combination of adaptive optics and high-speed retinal tracking, a group of researchers from the University of California, Berkeley, and the University of Washington, Seattle, have, for the first time, targeted and stimulated individual cone photoreceptor cells in a living human retina (1). The team stimulated individual long (L), middle (M) and short (S) wavelength-sensitive cones with short flashes of cone-sized spots of light (Figure 1) in two male volunteers, who then reported what they saw. Two distinct cone populations were revealed: a numerous population linked to achromatic percepts and a smaller population linked to chromatic percepts. The findings indicate that separate neural pathways exist for achromatic and chromatic perception, challenging current models of how color is perceived. Ramkumar Sabesan and Brian Schmidt, joint first authors of the paper, share their thoughts.

Figure 1. Montage of the human retina illustrating study design. Each spot is a single photoreceptor, and each ring indicates one degree of visual angle (~300 µm) from the fovea (represented by a blue dot). The inset is an enlarged pseudo-colored image of the area where individual cones (L [red], M [green] and S [blue]) were stimulated with green light. Inset size: 100 µm. Credit: Ramkumar Sabesan, Brian Schmidt, William Tuten and Austin Roorda.

References

1. R Sabesan et al., “The elementary representation of spatial and color vision in the human retina”, Science Advances, 2, e1600797 (2016). PMID: 27652339.