- Virtual reality headsets and wearable head-up displays are still emerging as a useful technology

- Google Glass solves or sidesteps many problems although it lacks an immersive experience

- Glass is likely to be safe and comfortable, if used in short bursts

- It may be a short-lived phenomenon: contact lenses that contain a display are under development

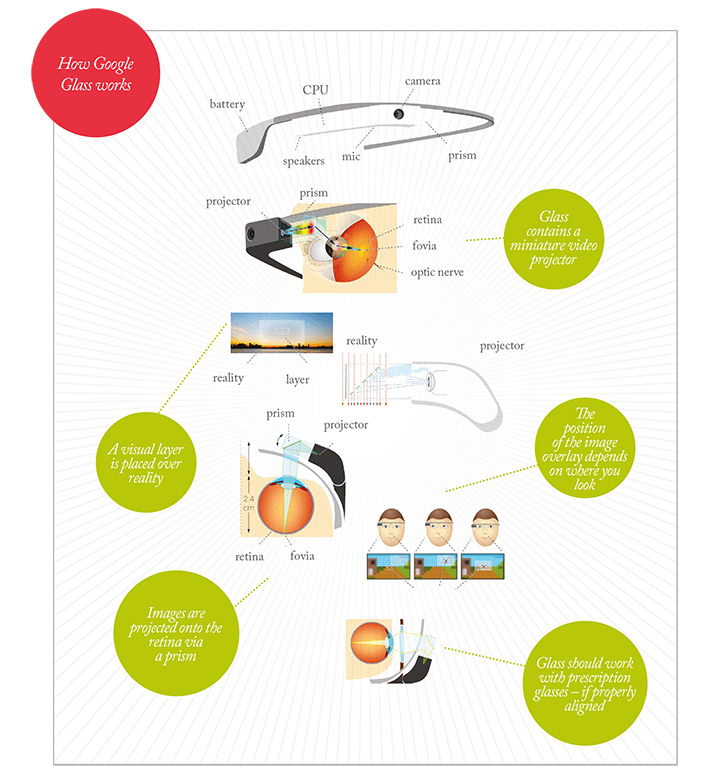

Google Glass is a wearable computer with an optical head-mounted display. The central concept is the combination of a tiny projector and a prism that projects images directly onto the retina (see infographic, next page). Worn like spectacles and operated by voice commands, it takes pictures, records videos, sends messages, gets directions, translates text, searches Google and provides live information on, for example, flight times and gates.

Glass is the most discussed device of its kind but is certainly not the first. Back in the 1980s the EyeTap (Figure 1) was developed by inventor Steve Mann. It used a camera mounted in front of a display in front of the eye so that computer-generated images could be superimposed onto the original scene – in essence, a wearable head-up display (HUD). Mann’s device was an early attempt at augmented reality; it was envisaged that useful digital information could be overlaid onto objects in front of the wearer, or that a building design could be rendered viewable in situ on the site where it would then be built. One issue with the EyeTap approach is that misalignments between the camera, display and pupil rapidly cause disorientation in the wearer, and with it dizziness, headaches and emesis. Such devices require customization before use and precise alignment when worn, which clearly limits their utility for widespread consumer use.

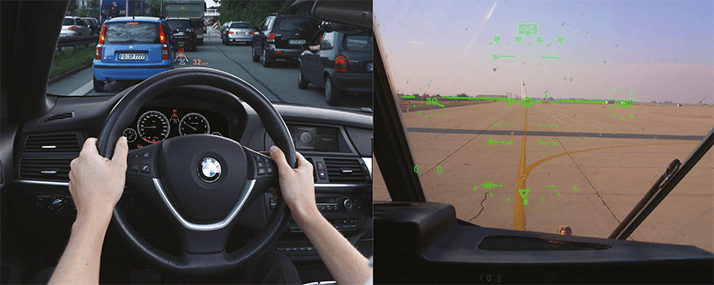

HUDs have been used in aircraft for decades – early optical, reflective versions were demonstrated before World War II – and digital versions are today becoming standard equipment in cars (see Figure 2). A feature of all HUDs is economy of information – partly to avoid superimposing images over things that need to be seen and partly to avoid “cognitive capture”. This is the phenomenon of impaired decision-making; information from the HUD image needs to be processed by the brain, and this can distract the user. Cognitive capture involves selective attention, divided attention, and attention switching – and it is exacerbated by increasing the amount of information presented on the display, by advanced wearer age and by high workload conditions.

Google knew all this when it embarked upon “Project Glass” and involved Eli Peli, Professor of Ophthalmology at Harvard Medical School, early on in the device’s development to advise on comfort and safety. The result is that the Glass wearer perceives a small translucent screen hovering at about arm’s length distance, extended up and outward from the right eye. This avoids the need to have a perfect alignment of eye, screen and camera – minimizing the dizziness and headaches – and the need to have a rapidly updating display. Glass neither disrupts binocular vision fully, nor can it do this for a prolonged period – it’s not intended to be used constantly, and the battery only lasts for 1 hour of continuous use. Essentially, if Glass is used as intended, the risks of adverse events are low. It isn’t totally immersive; it can’t highlight objects in the wearer’s full field of view, nor does it try to; it basically acts just like a mobile phone display. Glass could be developed in future versions to project across the entire field of vision, but then some of the problems side-stepped by Google would have to be tackled head on. It is possible, but others have even more ambitious ideas (see below).

A Medical Aid and Device?

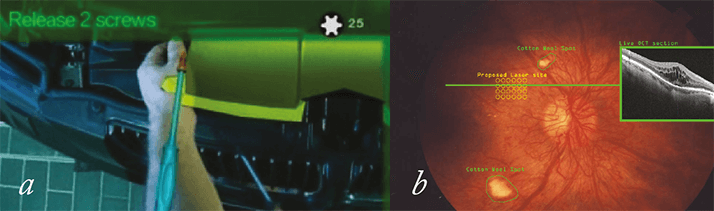

Google Glass is already being used in the clinic. Christopher Kaeding, an orthopedic surgeon at the Ohio State University Wexner Medical Center, uses Google Glass to livestream his surgery, demonstrating procedures to medical students watching in real time on their laptops, and instantaneously consulting with colleagues elsewhere in the hospital. A similar opportunity clearly exists in ophthalmic surgery. The potential for remote consultation is plain to see, but the educational advantages are even greater. Medical students can observe time-critical procedures simultaneously – rather than one after another. Whatever the surgeon sees through complex instrumentation for use only by experts is now available for viewing by students in the lecture theater. For those performing a procedure for the first time an augmented reality approach might help – instructional images overlaid onto the field of view, guiding the surgeon through the procedure, could be of real educational utility (see Figure 3).

Pierre Theodore, a cardiothoracic surgeon at the University of California San Francisco Medical Center, has found another use for Google Glass while performing surgery. He compared his patient’s CAT scan images with what was in front of him – a process he likened to driving a car and glancing into the rear-view mirror. An ophthalmologist might use Glass to bring up relevant details, like previous central retinal thickness measurements of a patient with macular edema, or to monitor their instrument, laser or vitrectome settings. These things are not essential, but they are handy. A more mundane application would be to record all interactions between patients and doctors – with consent – making it easy for patients to review the advice given to them by their physicians, and providing a definitive record of all interactions between doctor and patient. In contrast to the potential utility of Google Glass as a medical tool, its value as a device for the treatment of ophthalmic disease appears to be limited. Some diagnostic procedures may be possible, such as an app for best corrected visual acuity (BCVA) eye testing; the patient, wearing their prescription contact lenses, reads the letters that he or she sees, and Glass scores the results and e-mails the patient’s optician or ophthalmologist.

Looking to the future, clinical applications start to appear in what the company Innovega calls a “wearable transparent HUD” – a device that comprises a soft contact lens and some nanoscale display technology (see Figure 4). The information displayed could make the most of any residual vision in near-blind patients with retinal disorders, and potentially delay the placement of retinal implants. Indeed, such devices – with sufficient real-time graphics processing power – could have a number of useful applications for patients with impaired vision; perception can be altered, and improved. For example, everyday objects could be recognized and highlighted, amplified or magnified. Real-time correction of certain ocular defects – like post-LASIK surgery glare (Figure 5) – may even become possible. As ever, the technology seems to be 5–10 years away from commercial availability, but the fact that Google Glass is being positioned as a consumer technology means that, if it succeeds, the pace of development will be ferocious. And ophthalmology may benefit because of it.

Videos

- Google Glass used to live-stream surgery: http://tas.txp.to/0213-glass

- Early augmented reality HUD device for patients with tunnel vision: http://tas.txp.to/0213-harvardHUD

- Augmented reality in action, guiding BMW mechanics through a repair process: http://tas.txp.to/0213-BMW