Personalizing Reality

How data-driven VR/AR is driving new insight into visual impairments

At a Glance

- How can eye disease affect our sight?

- Current depictions of sight loss in the media can be unrealistic

- Using a bottom-up approach, we’ve developed a VR/AR platform to quantitatively simulate visual impairments, based on clinical data

- Here, I highlight the technology and discuss its potential applications.

Most people have a pretty good idea of what short- or long-sightedness looks like. Many of us experience it on a daily basis, while those with perfect vision can simulate it simply by wearing glasses of the wrong prescription. There is a key gap in public understanding, however, when it comes to posterior eye diseases such as glaucoma and age-related macular degeneration (AMD). In those cases, light is focused correctly on the retina, but is not being encoded properly by the brain. It is hard to imagine what effect that has on our vision, and to make matters worse, the depictions you see if you search online are often wildly inaccurate. Indeed, the whole notion of drawing what a specific disease looks like is questionable, given that two patients with the same diagnosis often report very different experiences. How, then, can we understand what it is like to have a visual impairment? Supported by the NIHR Biomedical Research Centre at Moorfields Eye Hospital NHS Foundation Trust and UCL Institute of Ophthalmology, and by donations from Moorfields Eye Charity, we’ve been developing a new platform to simulate how others see using virtual and augmented reality (VR/AR).

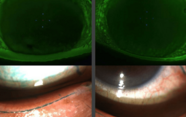

More than a blob

Many existing depictions of vision loss fundamentally involve superimposition – essentially placing a black ‘blob’ on top of the visual scene. The blob might be in the center of the screen in the case of AMD, around the edges if it’s glaucoma, or there might be lots of little black blobs in the case of diabetic retinopathy. This approach is computationally expedient, but isn’t very realistic. For one thing, posterior visual impairments are not static, but move with your eyes – affecting different parts of the screen depending on where you are currently looking. Secondly, patients overwhelmingly tend to report that they don’t see ‘black blobs’ at all. Instead, they tend to report parts of the visual scene becoming blurred or jumbled, or objects simply appearing absent altogether. Indeed, you can experience this yourself: try closing one eye and observing what your blind spot looks like. Do you see a black blob?

New technologies allow us to tackle both of these challenges. Firstly, we can use the eye- and head-tracking built into the latest VR headsets to make simulations gaze-contingent: localizing impairments on the user’s retina, rather than on the screen. Secondly, we can use modern graphics hardware – of the sort designed primarily for computer gaming or bitcoin mining – to apply advanced image-processing techniques to an image or camera-feed in real time. For instance, with the power contained in a typical smartphone, it is relatively easy to blur, desaturate, discolor or distort an image, or to cut a hole in one area and fill it in with random shapes or textures. Our ultimate goal is to recreate the invisible nature of many impairments: removing information silently, in such a way that you don’t realize anything is missing.
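To make the idea of a gaze-contingent impairment concrete, here is a minimal sketch in plain Python. It is not the code used in our simulator: the function name, the tiny grayscale "image" (a list of rows of values between 0 and 1), and the choice to fade the affected region towards the average brightness of the scene are all assumptions made for illustration. The point is simply that the affected region is defined relative to where the user is looking, so it moves across the screen whenever the gaze moves.

```python
import math


def apply_gaze_contingent_loss(image, gaze, offset, radius):
    """Fade a circular region of `image` towards the scene's mean brightness.

    image  -- list of rows of grayscale values in [0, 1], indexed image[y][x]
    gaze   -- (x, y) pixel the user is currently looking at (from eye tracking)
    offset -- (dx, dy) position of the impaired region relative to the gaze,
              i.e. fixed on the retina rather than on the screen
    radius -- radius of the impaired region, in pixels

    All names and the fading rule are hypothetical, for illustration only.
    """
    height, width = len(image), len(image[0])
    centre_x = gaze[0] + offset[0]
    centre_y = gaze[1] + offset[1]
    mean_level = sum(sum(row) for row in image) / (width * height)

    output = []
    for y, row in enumerate(image):
        new_row = []
        for x, value in enumerate(row):
            distance = math.hypot(x - centre_x, y - centre_y)
            # Weight is 1 at the centre of the region, falling to 0 at its edge.
            weight = max(0.0, 1.0 - distance / radius)
            # Blend towards the mean instead of painting the region black.
            new_row.append((1.0 - weight) * value + weight * mean_level)
        output.append(new_row)
    return output


# A 5x5 checkerboard stands in for a camera frame.
frame = [[float((x + y) % 2) for x in range(5)] for y in range(5)]
looking_top_left = apply_gaze_contingent_loss(frame, (1, 1), (0, 0), 1.5)
looking_bottom_right = apply_gaze_contingent_loss(frame, (3, 3), (0, 0), 1.5)
```

In the two calls at the end, the same impairment is applied to the same frame, but the altered pixels follow the gaze position rather than staying fixed on the screen.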

A bottom-up approach

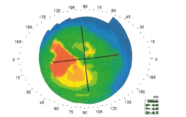

Our other key design philosophy is that we want our simulations to be bottom-up: driven by data rather than disease labels. Thus, rather than starting with a diagnosis, our basic building blocks are the different properties of the visual system, such as the spatial resolution of the eye, its sensitivity to changes in luminance or color, or how straight a uniform grid of lines appears. Each of these aspects of vision can be quantified using the relevant eye-test, and all these bits of data can then be fed into the simulator. By assembling these basic blocks, we hope to build a unique visual profile for a specific individual, irrespective of their particular diagnosis. Of course, that doesn’t stop us from using big datasets to create the ‘average’ profile for specific diseases. For example, one of the things we did recently was compare the average vision of a newly diagnosed glaucoma patient in the UK versus one in Tanzania. The results were arresting.
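As a rough illustration of what "feeding test data into the simulator" could look like, the sketch below turns a single letter-chart score into a blur setting. The only established fact used here is the definition of logMAR: a score of 0.0 corresponds to a minimum angle of resolution (MAR) of 1 arcminute, and MAR = 10^logMAR. Everything else is an assumption made for illustration, including the `VisualProfile` class, the `blur_sigma_pixels` function, and in particular the scaling constant `SIGMA_PER_ARCMIN`, which is an arbitrary placeholder and not a validated mapping.

```python
from dataclasses import dataclass

# Hypothetical scaling between excess MAR and blur width; not a clinical value.
SIGMA_PER_ARCMIN = 0.5


@dataclass
class VisualProfile:
    """A (very incomplete) per-eye profile built from clinical test data."""
    logmar_acuity: float  # letter-chart score; 0.0 means a MAR of 1 arcminute


def blur_sigma_pixels(profile, pixels_per_degree):
    """Estimate a Gaussian blur width (in pixels) from letter acuity.

    `pixels_per_degree` describes the headset display and is supplied by the
    caller. The linear mapping below is an illustrative assumption.
    """
    mar_arcmin = 10.0 ** profile.logmar_acuity
    # Only blur by the amount that acuity is worse than the 1-arcminute reference.
    excess_arcmin = max(0.0, mar_arcmin - 1.0)
    excess_degrees = excess_arcmin / 60.0
    return excess_degrees * pixels_per_degree * 60.0 * SIGMA_PER_ARCMIN / 60.0 * 60.0 / 60.0


reference_eye = VisualProfile(logmar_acuity=0.0)
impaired_eye = VisualProfile(logmar_acuity=1.0)
no_blur = blur_sigma_pixels(reference_eye, pixels_per_degree=60.0)
some_blur = blur_sigma_pixels(impaired_eye, pixels_per_degree=60.0)
```

A full profile would carry many more measurements (contrast sensitivity, color vision, visual field, distortion), each driving its own filter. The same pattern applies to each one: a measured number goes in, and a filter parameter comes out.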

It is important to stress that, despite our approach being data-driven, there is still a degree of artistic license in our simulations. For instance, there can be a lot of reasons why you may score badly on an eye-test such as a letter chart: you might be unable to read small letters because they appear blurry, or because they appear distorted, or because you can’t keep your eye sufficiently still. Ultimately, we hope to develop eye tests that can distinguish between these different causes. But for now we have to use our judgment to predict which of these causes is most likely, and it will be interesting to see whether patients agree with some of the decisions we’ve made.

Using the simulator…

In education

Simulations can leave an immediate and indelible impression on users. We’re visual creatures; around a third of our brain is dedicated to processing visual information – it’s why visual impairment can be so debilitating. So although we can read a description of something, seeing it often has much greater impact. Our VR/AR headset delivers a huge amount of information in just a few seconds, and very quickly gives people a sense of how a condition might affect everyday life in a way that is not always possible from reading a textbook or viewing a static image. Despite having created them, I have been surprised by some of the simulations myself; seeing the VR experience of nystagmus, for example, was really shocking. At public events, meanwhile, people are often surprised to see how little some conditions, such as color blindness, actually affect one’s ability to perform day-to-day tasks. Consequently, we’re looking into the impact of the simulator as a teaching and empathy aid, and we have an ongoing trial with City University School of Optometry (London, UK) to assess whether the simulator improves understanding and empathy amongst new optometry students compared with reading the textbook alone. We want to see in particular whether the simulator makes new students better at predicting what challenges a patient will face.

We are also exploring the use of the simulator as an educational tool for the public. Generally, people aren’t good at recognizing the signs of visual problems – for instance, diagnosis rates for glaucoma are alarmingly poor (by some estimates, as low as 50 percent). Accurate simulators could raise awareness of how vision may be affected in the initial stages of disease, helping patients recognize potential issues earlier, when they can be more effectively treated. Further, showing how a patient’s visual impairment is likely to progress might help encourage patients to comply with their treatment regimen. We have been particularly inspired in this respect by Peek Vision, who do a lot of work in developing countries, and who found that providing parents with a printed depiction of their child’s vision made them more likely to attend future appointments.

In research

Sight loss simulators can also be a powerful tool for research. Being able to explicitly control and manipulate exactly what the user sees gives us a unique opportunity to reexamine long-standing scientific questions from a fresh perspective.

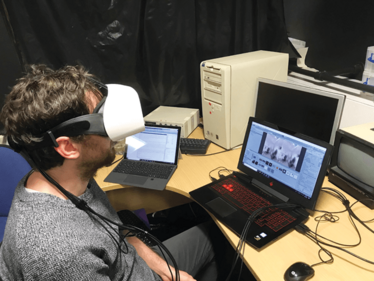

For example, a lot of clinicians have noticed that the eye tests we use most often (letter charts, perimetry, and so forth) tend to be relatively poor at predicting a patient’s quality of life. So you can have two people with the same test score, but each reporting very different levels of impairment. Is this because the tests are not capturing all the key information? Or are some people better at coping with sight loss? Simulators allow us to systematically tease apart these two hypotheses. For instance, in one study we are currently running, we are using AR to give different people the exact same impairment. We are then asking them to perform a range of tasks, including various eye-tests, as well as the sorts of everyday tasks that patients often find challenging, such as finding their mobile phone or making a cup of tea. We can then observe directly whether some eye-tests are better than traditional measures at predicting performance on everyday tasks. Furthermore, by analyzing the eye-, head- and body-tracking data from the headset, we can examine why it is that some people are better able to cope with their impairment. In the future, we might even be able to teach everybody the coping strategies that our best-performing participants identify.

As well as giving different people the same impairment, we can also do the opposite experiment – giving the exact same person a range of different impairments. In this way, we can study how different patterns of sight loss affect everyday life, while controlling for all other factors, such as age or physical fitness. This will ultimately allow us to develop better ways of quantifying an individual’s expected level of impairment, which is vital when assessing how effective a new therapy is, or when deciding how to prioritize patients. In the long term, we hope that such experiments with ‘simulated patients’ may help us devise better ways of predicting an individual’s needs, allowing us to preempt difficulties before they arise.

In accessibility

As society ages and rates of visual impairment increase, there is an ever greater need to develop environments and products that are accessible to everyone. I envisage that simulators such as ours will become a vital tool for engineers and architects: allowing them to see for themselves whether or not a space is usable, and how it can be made more welcoming for people with sight loss. Is this train station easy to navigate? Is this website readable for people with low vision? By using AR or VR, you can simply look and see. Perhaps the most exciting aspect of this approach – and the reason why I think it will really take off – is that the problems identified are often easily addressed. For example, it might just require using a thicker marker pen to write on a whiteboard, or changing the color of the lighting around a particular staircase. These are small changes, but they can make a big difference to people’s lives, and are solutions that often aren’t apparent until you see the world through someone else’s eyes.

The VR/AR simulator

- The user wears a VR/AR headset to look at either pre-recorded videos, VR environments, or the real world via front-facing cameras (‘Augmented Reality’). The images are then filtered in real time through a series of digital processing effects designed to mimic various aspects of visual impairments. The digital filters are based on clinical test data, such as perimetry or letter-acuity.

- Images can be delivered independently to each eye, and eye tracking allows impairments to be displayed relative to the user’s gaze (i.e., localized on the retina).

- A video of the simulator in action can be viewed HERE.
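The "series of digital processing effects" described above can be pictured as a chain of small filters applied to each frame, with a separate chain for each eye. The sketch below is a toy version under stated assumptions: the filter functions (`desaturate`, `reduce_contrast`), the `render_stereo` helper and the parameter values are all hypothetical names and numbers chosen for illustration, and a real system would run equivalent operations on graphics hardware rather than in plain Python.

```python
def desaturate(amount):
    """Return a filter that moves each RGB pixel towards its grey level.

    amount = 0.0 leaves color untouched; amount = 1.0 removes it entirely.
    """
    def apply(pixel):
        grey = sum(pixel) / 3.0
        return tuple(channel + amount * (grey - channel) for channel in pixel)
    return apply


def reduce_contrast(gain):
    """Return a filter that compresses pixel values towards mid-grey (0.5)."""
    def apply(pixel):
        return tuple(0.5 + gain * (channel - 0.5) for channel in pixel)
    return apply


def render_eye(frame, filters):
    """Apply each filter in order to every pixel of `frame` (rows of RGB tuples)."""
    output = frame
    for pixel_filter in filters:
        output = [[pixel_filter(pixel) for pixel in row] for row in output]
    return output


def render_stereo(frame, left_filters, right_filters):
    """Produce one image per eye, each with its own chain of filters."""
    return render_eye(frame, left_filters), render_eye(frame, right_filters)


# One pure-red pixel as a stand-in for a camera frame.
frame = [[(1.0, 0.0, 0.0)]]
left_image, right_image = render_stereo(
    frame,
    left_filters=[desaturate(1.0), reduce_contrast(0.5)],  # impaired eye
    right_filters=[],                                      # unimpaired eye
)
```

Keeping each effect as an independent step means the same chain can be reordered, extended, or driven by a different person's test data without changing the rest of the pipeline.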

Looking ahead

It is clear that VR and AR will have a huge impact on eyecare in the future. If eye tests aren’t being performed at home using VR/AR headsets in the next 5–10 years, I will be very surprised – and disappointed really, as we already have a lot of the necessary technology. As for our simulator, we don’t yet know what the most useful application is going to be, and we’re still at the stage of exploring to see what works and what doesn’t.

To a large extent, the future of sight-loss simulations will also depend on how the technology evolves. In terms of software, the signs are already extremely encouraging; a lot of the historical hurdles are tumbling. For example, in the past it was a nightmare trying to adapt code to support different devices. But these days it usually only takes a few clicks to transfer our simulator from one type of VR/AR headset to another, or from an Android smartphone to an iPhone; I even got it running on the display of my fridge-freezer last week, although kneeling down in the kitchen doesn’t make for an ideal viewing experience. In terms of hardware, there’s still some room for improvement though, and I expect we’ll see some big leaps forward in the next 3 years. For example, at the moment, the headsets are fairly bulky; in the future I hope to see the same technology built into an ordinary pair of glasses, which will make applications such as our simulator much more accessible. Fortunately, a lot of the big gaming and home-entertainment companies are really pushing the hardware forwards, and we can piggyback on any new developments as they become available. And ultimately it is this that makes this such an exciting area to be working in; simulation spectacles have been around a long time – it’s just that, in the past, they’ve involved glasses with black spots glued onto them... Now, because we have all of this amazing technology, we are only really limited by our imagination, and who knows where the future will take us – or what we will discover along the way?

Pete Jones is a post-doctoral research associate in the UCL Child Vision Lab, based both in the UCL Institute of Ophthalmology and Moorfields Eye Hospital, London, UK.
