It’s All About Perspective

Consign your surgical microscope to the scrapheap. Digital light field imaging promises the elimination of reflections, aberrations, blur and more.

At a Glance

- To take a great image, you need your camera to be in the right place under perfect lighting conditions

- These conditions are virtually non-existent with surgical microscopes – and there’s little that can be done about it

- Digital light field imaging takes a completely different approach to light sensing and rendering, and allows refocusing, changes of point of view and the removal of reflections

- This approach promises great benefits in terms of enhanced periprocedural tissue visualization for surgeons and, ultimately, for surgical robots too

“You want to make a portrait of your wife. You fit her head in a fixed iron collar to give the required immobility, thus holding the world still for the time being. You point the camera lens at her face; but alas, you make a mistake of a fraction of an inch, and when you take out the portrait it doesn’t represent your wife – it’s her parrot, her watering pot – or worse.” – Le Charivari magazine, 1839.

When it comes to improving ocular imaging, we can learn a lot from the first days of photography. Back then, focusing was the challenge: early lenses and plates were slow, depth of field was shallow, and exposure times were so long that fixed iron collars were deemed necessary to keep people still for long enough to take an unblurred photograph. Even now, a blurred photograph (whether through motion or a focusing error) evokes a sense of loss. You can’t refocus a conventional photograph after it’s taken, so the image is lost forever.

Figure 1. What do we mean by “plenoptic”? A plenoptic camera captures the direction, wavelength and intensity of every ray of light reaching the digital image sensor, at every time point captured.

Let’s look at vitreoretinal surgery. Whether the surgeon is using a surgical microscope or a 3D (stereo) camera with a head-up display, there’s still a shallow depth of field, so there will inevitably be times when tissues you’d like to see in focus… aren’t. Then there’s illumination. When professional photographers light a scene or a set, they can adjust where the lights are placed and where the camera is positioned to get the best possible image. Surgical light sources have improved greatly over the last 20 years, but that freedom is not a luxury surgeons have.

What’s limiting imaging

Digital cameras have made a huge difference to what can be achieved in photography. Manufacturers can produce incredibly light-sensitive sensors that measure in the hundreds of megapixels, and because the information is digital, algorithms can be applied to the image data, both in the camera at the time of acquisition and afterwards (1). The flash, tripod and studio lights haven’t been eliminated from photography, but it’s now possible to take reasonable photographs in near-dark conditions with just a digital camera that has a great sensor and a good processing unit. It’s important to realize that today’s digital images are not just directly recorded; they are computed too. Clever algorithms make them sharper and brighter, and help the colors stand out. But they don’t solve the issue that stalks all lenses: aberration. It’s unavoidable, a natural consequence of refraction. Not all light converges on a single point on the sensor, and the bigger the lens, the bigger the problem becomes. If only there were a way of correcting for it…
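To make the last point concrete, the short Python sketch below traces rays that arrive parallel to the optical axis through a single spherical refracting surface, applying Snell’s law exactly rather than the small-angle approximation. The surface radius (R = 10), the refractive indices (n1 = 1.0, n2 = 1.5) and the helper name `axial_crossing` are illustrative assumptions, not parameters of any real microscope. Rays entering farther from the axis, which is what a bigger lens admits, cross the axis short of the paraxial focus n2·R/(n2 − n1): this is spherical aberration.

```python
import math

def axial_crossing(h, R=10.0, n1=1.0, n2=1.5):
    """Axial position where a ray parallel to the axis at height h crosses the axis
    after refracting at one spherical surface (vertex at z = 0, centre at z = R).
    Illustrative single-surface model, not a real lens design."""
    theta1 = math.asin(h / R)                       # angle of incidence to the surface normal
    theta2 = math.asin(n1 * math.sin(theta1) / n2)  # refraction angle from Snell's law
    z_hit = R - math.sqrt(R**2 - h**2)              # sag: where the ray meets the surface
    return z_hit + h / math.tan(theta1 - theta2)    # travel along the bent ray to the axis

R, n1, n2 = 10.0, 1.0, 1.5
paraxial_focus = n2 * R / (n2 - n1)  # small-angle (paraxial) focus for these values

for h in (0.5, 2.0, 4.0, 6.0):
    z = axial_crossing(h, R, n1, n2)
    print(f"h = {h:3.1f}: crosses axis at z = {z:6.2f} (shift {z - paraxial_focus:+.2f})")
```

The shift grows rapidly with ray height, which is why widening the aperture to gather more light also worsens the blur that a single physical focal point cannot remove.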

Tailor the hardware, not the algorithm

You might refer to a photograph as a “snapshot.” That’s a great term: it evokes what a photograph is in terms of light, namely the total sum of the light rays striking each point on an image at a single point in time. What a photograph doesn’t do is record the amount of light travelling along each of the individual rays that make up the image, which, as we’ll see soon, can be very useful. An apt analogy is an audio recording studio (1): a photograph is like a recording of all the instruments playing together on a single track, whereas recording each instrument on a separate track lets producers improve and fix things in the mix.

In terms of digital photography, there is a way of achieving “multitrack” imaging: digital light field photography (DLFP). It works by exploiting the fact that you can produce digital camera photosensors with hundreds of megapixels, yet you don’t really need more than two megapixels for a standard 4" × 6" photograph. DLFP uses that spare capacity to sample each individual ray of light that contributes to the final image: the full parametrization of light in space, known as the plenoptic function (Figure 1). What is sampled is termed the “light field”, a term borrowed from computer graphics, and, just as with a virtual model, that information can be processed to generate different outputs, such as images with different points of focus.
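The trade-off between spatial and angular sampling is simple arithmetic. The sketch below assumes an idealized sensor in which every microlens covers exactly an n × n block of pixels; the 16,000 × 12,000-pixel (192-megapixel) sensor and the 10 × 10 block are hypothetical numbers chosen to match the scale discussed above, not the specification of any real camera, and `light_field_budget` is an illustrative name.

```python
def light_field_budget(sensor_w, sensor_h, n):
    """Split a sensor into n x n pixel blocks, one block per microlens.

    Returns (output_width, output_height, rays_per_output_pixel) for an idealized
    plenoptic camera with square microlenses perfectly aligned to the pixel grid.
    """
    out_w, out_h = sensor_w // n, sensor_h // n  # one output pixel per microlens
    return out_w, out_h, n * n                   # n*n directional samples per output pixel

w, h, rays = light_field_budget(16000, 12000, 10)  # hypothetical 192-megapixel sensor
print(f"{w} x {h} output pixels ({w * h / 1e6:.2f} MP), {rays} ray directions each")
```

Under these assumptions, a 192-megapixel sensor yields a roughly 2-megapixel image in which every output pixel is built from 100 separately recorded ray directions.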

Figure 2. What is light-field imaging? a. The compound eye of an insect, compared with the microlens array in a light field camera; b. A specialization of the plenoptic function, the 5D function L(φ, θ, Vx, Vy, Vz), preserves the encoding of viewpoints and ray angles and unlocks the technique; it requires both a 2D orientation (φ, θ) and a 3D position (Vx, Vy, Vz).

To capture all of this information, DLFP requires a microlens array – somewhat like the compound eyes of insects (Figure 2) – to be placed in front of the photosensor, with each microlens covering a small array of photosensor pixels. What the microlens does is separate the incoming light into a tiny image on this array, forming a miniature picture of the incident lighting – sampling the light field inside the camera in a single photographic exposure (1; Figure 3). In other words, the microlens can be thought of as an output image pixel, and a photosensor pixel value can be thought of as one of the many light rays that contribute to that output image pixel. Finally, each microlens has a slightly different and slightly overlapping view to the next one – each has a different perspective, and that can be exploited too. But the secret to unlocking the potential of DLFP (and rendering the final image) lies in raytracing.
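The pixel bookkeeping described above can be sketched in a few lines of NumPy. This assumes an idealized camera that real devices only approximate: every microlens covers exactly an n × n block of pixels, the blocks line up perfectly with the pixel grid, and there is no vignetting (real decoders must first calibrate the microlens centers). The function names are hypothetical. Reading out the same pixel position (u, v) under every microlens gives one “sub-aperture” view, that is, one perspective on the scene.

```python
import numpy as np

def decode_light_field(raw, n):
    """Rearrange a raw plenoptic image into a 4D light field indexed lf[u, v, s, t].

    Assumes each microlens covers exactly an n x n pixel block aligned with the grid.
    (s, t) indexes the microlens (ray position); (u, v) indexes the pixel under
    that microlens (ray direction).
    """
    H, W = raw.shape
    if H % n or W % n:
        raise ValueError("raw image size must be a multiple of the microlens pitch n")
    S, T = H // n, W // n
    # raw[s*n + u, t*n + v]  ->  lf[u, v, s, t]
    return raw.reshape(S, n, T, n).transpose(1, 3, 0, 2)

def sub_aperture_view(lf, u, v):
    """One perspective: pixel (u, v) taken from under every microlens."""
    return lf[u, v]

# Toy sensor: 6 x 6 pixels, 3 x 3 pixels per microlens -> 2 x 2 microlenses.
raw = np.arange(36, dtype=float).reshape(6, 6)
lf = decode_light_field(raw, 3)        # lf.shape == (3, 3, 2, 2): 9 views of 2 x 2 pixels
top_left_view = sub_aperture_view(lf, 0, 0)
```

Summing all the views, `lf.sum(axis=(0, 1))`, collapses the directions again and gives back an ordinary (low-resolution) photograph, the “single track” of the studio analogy.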

Refocusing after the event – and more

Imagine a camera configured in exactly the way you want – and that you could (re)trace the recorded light through that camera’s optics to the imaging plane. Summing those light rays then produces the photograph. Raytracing also gives you the tools to start correcting for the aberration that’s always present with physical lenses – you can start to handle the unwanted non-convergence of rays and get a crisper, more focused image in the final computation, as you’re focusing not through a flawed real lens, but a perfect, imaginary lens instead.
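For readers who want the arithmetic behind “summing those light rays”, here is the formulation from reference (1), restated as a sketch. Each ray inside the camera is labeled by where it crosses the main lens plane, (u, v), and the sensor plane, (x, y), a distance F behind the lens. Ignoring aperture and cos⁴ vignetting factors (as (1) does for simplicity), a conventional photograph integrates the light field L_F over the lens, and a photograph rendered on a virtual sensor plane at depth αF integrates the same recorded rays along sheared lines:

$$E_F(x, y) = \frac{1}{F^2} \iint L_F(x, y, u, v)\, du\, dv$$

$$E_{\alpha F}(x', y') = \frac{1}{\alpha^2 F^2} \iint L_F\!\left(u\left(1 - \frac{1}{\alpha}\right) + \frac{x'}{\alpha},\; v\left(1 - \frac{1}{\alpha}\right) + \frac{y'}{\alpha},\; u,\; v\right) du\, dv$$

The shear follows from similar triangles: a ray leaving the lens at u and reaching x' on the virtual plane at αF crossed the real sensor plane at x = u + (x' − u)/α. Setting α = 1 recovers the original photograph.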

But really, what’s most impressive is the fact that this approach lets you refocus the image over a range of distances after the fact – you can adjust the image sensor plane, post hoc, so the image is focused as desired – or all of the image can be rendered in focus (and if it’s video footage, this can be performed in real-time too). You might be aware of this technology already: it’s what underpins the commercially available Lytro and Raytrix cameras.
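A common discrete version of this refocusing is “shift-and-add”: translate each perspective view in proportion to its offset from the central view, then average. The NumPy sketch below assumes the 4D light field has already been decoded into an array indexed lf[u, v, s, t] (view coordinates first, pixel coordinates second); the function name `refocus` and its `slope` parameter are hypothetical. For simplicity it rounds shifts to whole pixels and lets `np.roll` wrap at the borders, whereas practical implementations interpolate sub-pixel shifts and handle edges properly.

```python
import numpy as np

def refocus(lf, slope):
    """Shift-and-add refocusing of a 4D light field lf[u, v, s, t].

    Each sub-aperture view is shifted by slope * (its offset from the central view)
    and all views are averaged. slope = 0 keeps the original focal plane; other
    values move the virtual focal plane nearer or farther.
    """
    U, V, S, T = lf.shape
    uc, vc = (U - 1) / 2, (V - 1) / 2        # index of the central view
    out = np.zeros((S, T), dtype=float)
    for u in range(U):
        for v in range(V):
            ds = int(round(slope * (u - uc)))  # whole-pixel shift (simplification)
            dt = int(round(slope * (v - vc)))
            out += np.roll(lf[u, v], shift=(ds, dt), axis=(0, 1))
    return out / (U * V)

# Toy example: one bright point whose position drifts by +1 pixel per view step.
U = V = 5
lf = np.zeros((U, V, 21, 21))
for u in range(U):
    for v in range(V):
        lf[u, v, 10 + (u - 2), 10 + (v - 2)] = 1.0
sharp = refocus(lf, -1.0)   # views aligned: the point is concentrated at (10, 10)
blurred = refocus(lf, 0.0)  # views not aligned: the point is smeared over 25 pixels
```

A point that drifts by d pixels per step between neighboring views comes into focus at slope = −d; sweeping `slope` over a range of values produces a stack of images focused at different depths, all from one exposure.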

But what can be performed with light field imaging doesn’t stop there: it also offers reflection removal. If a reflection affects some, but not all, of the image perspectives, it can be computed away. And finally, because this approach captures the scene from many different angles, it even offers high-resolution, three-dimensional information.
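One simple way to “compute away” a reflection that contaminates only a minority of views is a per-pixel median across views: a specular highlight that appears in only a few viewpoints is an outlier, and the median ignores it. This is a generic robust-statistics technique substituted here for illustration, not the method used by the article’s authors. It assumes the views are already registered so that each pixel sees the same tissue point (for example, after shifting them onto a common focal plane), and `suppress_reflections` is a hypothetical name.

```python
import numpy as np

def suppress_reflections(views):
    """Fuse registered views of shape (num_views, H, W) with a per-pixel median.

    If a reflection corrupts a pixel in fewer than half of the views, the median
    returns the uncorrupted value. Assumes the views are already aligned.
    """
    views = np.asarray(views, dtype=float)
    return np.median(views, axis=0)

# Synthetic test scene: 9 perfectly registered views of the same tissue.
rng = np.random.default_rng(0)
tissue = rng.uniform(0.2, 0.6, size=(8, 8))
views = np.repeat(tissue[None], 9, axis=0)
views[:3, 2:4, 2:4] = 1.0                     # glare saturates a patch in 3 of the 9 views

mean_error = np.abs(views.mean(axis=0) - tissue).max()                # averaging keeps glare
median_error = np.abs(suppress_reflections(views) - tissue).max()     # median rejects it
```

Averaging the views (as plain refocusing does) leaves a faint version of the glare behind; the median removes it entirely, at the cost of needing the reflection to be absent from most viewpoints.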

What this means for ophthalmologists

The applications in ophthalmology are obvious (Figure 4) – let’s work through each feature in turn.

Figure 3. The basic principles that underpin the light-field camera. With a microlens array placed at the focal plane of the main lens, the pixels underneath each microlens sample the position, intensity and direction of each ray, which means you can obtain multiple views by selecting different pixels under each microlens.

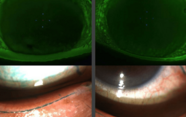

Figure 4. Some examples of everyday issues with surgical microscopes that light field imaging promises to help eliminate.

Aberration removal

Better image quality benefits everyone, and aberration removal is clearly part of that. Where it might have the greatest impact is in screening: features at the periphery of an image that would otherwise start to distort no longer do. This not only lets clinicians screening images for potential pathologies see them more clearly; as computer algorithms start screening fundus photographs (like the Google DeepMind/Moorfields Eye Hospital collaboration), it should also let those algorithms flag pathologies more reliably and reproducibly.

Reflection removal

This holds across all surgical procedures, but is particularly apparent in eye surgery, and especially in retinal surgery: illumination causes problems (Figure 4). Unlike professional photographers in a studio, who have the luxury of placing lamps in optimal locations and can block out off-camera reflections with black drapes, surgeons have no such comfort: they have to illuminate as best they can and work around the reflections and shadows that occur. Eliminating these issues should make surgery safer and easier, and it also opens up the possibility of using lower levels of illumination, which might reduce potential heat or phototoxicity issues too.

Everything in focus

Nothing in the eye is on a flat plane. Resolution and depth of field trade off against each other: both are set by the numerical aperture, and resolving finer detail shrinks the depth of field. Surgical microscopes are designed to strike a compromise between the two, but with an excess of image sensor pixels, light field imaging should eliminate the need for that compromise. If you want it to be, everything could be sharp and in focus. Motion blur (Figure 4) can also be detected and corrected for; it’s just a matter of computing the direction of motion and compensating for it.
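Given a light field, a “focal stack” can be rendered by refocusing at a series of depths, and an all-in-focus image can then be assembled by keeping, for each pixel, the slice in which it looks sharpest. The sketch below uses the magnitude of a discrete Laplacian as the sharpness measure; this is a generic focus-stacking technique chosen for illustration, not necessarily the authors’ pipeline, and the function names are hypothetical. Practical versions usually smooth the sharpness maps before choosing, to avoid noisy per-pixel decisions.

```python
import numpy as np

def laplacian_energy(img):
    """Absolute discrete Laplacian (with wrap-around borders): large where detail is sharp."""
    lap = (np.roll(img, 1, 0) + np.roll(img, -1, 0) +
           np.roll(img, 1, 1) + np.roll(img, -1, 1) - 4 * img)
    return np.abs(lap)

def all_in_focus(stack):
    """Fuse a focal stack of shape (num_slices, H, W) into one image.

    For each pixel, keep the slice with the highest Laplacian energy. Also returns
    the chosen slice index per pixel, which doubles as a coarse depth map.
    """
    stack = np.asarray(stack, dtype=float)
    sharpness = np.stack([laplacian_energy(s) for s in stack])
    best = np.argmax(sharpness, axis=0)                       # (H, W) slice indices
    fused = np.take_along_axis(stack, best[None], axis=0)[0]  # pick those pixels
    return fused, best

# Toy stack: a checkerboard "tissue", in focus on the left in slice 0
# and on the right in slice 1 (the out-of-focus half is flat grey).
yy, xx = np.indices((8, 8))
checker = ((yy + xx) % 2).astype(float)
left = xx < 4
fused, depth = all_in_focus([np.where(left, checker, 0.5), np.where(left, 0.5, checker)])
```

The index map returned alongside the fused image is a by-product worth noting: knowing which focal slice was sharpest at each pixel is already a rough form of depth information.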

The third dimension

Bear in mind that light field imaging essentially gives you many views of the tissue you want to manipulate, and these can be combined into a much richer representation of that tissue. Surgeons can choose to see what’s behind the surgical instruments, or even the tissue they are directly manipulating. In addition, these multiple views provide information about the third dimension, which can be useful in many ways.
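The link between multiple views and depth can be illustrated with the textbook stereo relation Z = f·B/d: a feature that shifts by d pixels between two viewpoints separated by a baseline B, seen with a focal length of f pixels, lies at depth Z. This is a pinhole-camera simplification: real plenoptic systems need a calibrated model of the main lens, and the function name and numbers below are hypothetical.

```python
def depth_from_disparity(disparity_px, baseline_mm, focal_px):
    """Pinhole-stereo depth estimate Z = f * B / d for two rectified views.

    disparity_px: shift of the same feature between the two views, in pixels
    baseline_mm:  separation of the two viewpoints, in millimetres
    focal_px:     focal length expressed in pixels
    Returns depth in the unit of the baseline (here, millimetres).
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the camera")
    return focal_px * baseline_mm / disparity_px

z = depth_from_disparity(10, 1.0, 2000)  # 2000 px * 1 mm / 10 px = 200 mm
```

Because disparity is inversely proportional to depth, nearer structures shift more between views, and repeating this measurement at every pixel across many view pairs builds up a depth map of the scene.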

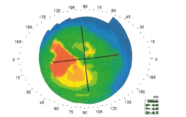

There’s a clear trend towards using head-up displays in retinal surgery, because operating at a surgical microscope soon becomes uncomfortable. Today’s head-up displays do a great job of approximating the stereopsis that you experience with surgical microscopes, but light field imaging might let instrument manufacturers synthesize an enhanced 3D view, which lends itself naturally to augmented reality: the overlay of pertinent images onto the 3D display the surgeon looks at. Feeding such input into virtual reality headsets like the Oculus Rift is also feasible, but initial attempts have proven unpopular: they take surgeons away from reality, and surgeons lose situational awareness. Of course, there are more avenues where light field imaging might prove useful: three-dimensional measurement of the optic nerve from normal fundus camera setups, corneal topography, or even forms of tomography if the approach also works with absorbing wavelengths…

Robotic eyes

There’s an obvious extension of light-field imaging: guiding robots to perform minimally invasive interventions. The robots get an extremely detailed (in theory, down to 10 µm precision), rich 3D environment in which to operate, with more data to help them determine where to ablate, augment, cut, debride or suture.

Light field imaging for medical applications is still in its infancy, though (2–4), which makes us among the first to explore this topic, especially in ophthalmology, where we build upon our digital autofocusing ophthalmoscopes (5). Much remains to be done to optimize and customize both the hardware and software for medical use in general, and for ophthalmic applications in particular. Light field imaging was first conceived in the 1980s, but we’re now well past the point where digital photosensor technology and computer graphics processing power have made it a feasible digital imaging approach, and things are progressing rapidly. I am lucky to have the support of Fight for Sight and the Academy of Medical Sciences, the two foundations that have bootstrapped the project. My clinical collaborator Pearse Keane from Moorfields Eye Hospital and Sotiris Nousias, the PhD student we are co-supervising, complete the team and are fundamental assets in bringing these new imaging technologies and algorithms to the clinic.

So if you’re a surgeon, imagine a reflection-free surgical microscope that has, in effect, adaptive optics (without the expensive deformable mirror), where nothing is out of focus and surgical instruments can be removed from your field of view. Add to that spectacular three-dimensionality and a head-up display, without a single iron collar required. Just imagine…

Christos Bergeles is a Lecturer and Assistant Professor in the Translational Imaging Group in the Centre for Medical Image Computing at University College London’s Department of Medical Physics and Biomedical Engineering.

- R Ng, “Digital light field photography”, PhD Thesis, Stanford University, Stanford, CA (2006). Available at: bit.ly/lytrothesis, accessed November 14, 2016.

- A Shademan et al., “Supervised autonomous robotic soft tissue surgery”, Sci Transl Med, 8, 337ra64 (2016). PMID: 27147588.

- HN Le et al., “3-D endoscopic imaging using plenoptic camera”, Conference on Lasers and Electro-Optics, OSA Technical Digest, paper AW4O.2. Available at: bit.ly/OSATD3D, accessed November 14, 2016.

- ZJ Geng, “Intra-abdominal lightfield 3D endoscope and method of making the same”, United States Patent Application Publication, Pub No: US 2016-0128553 (2016). Available at: bit.ly/zjasongeng, accessed November 14, 2016.

- CB Bergeles et al., “Accessible digital ophthalmoscopy based on liquid-lens technology”, in MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, Part II (2015).
