Forging Iron Man

The future of eye surgery is robotic arms and augmented reality. Will the ophthalmologists of tomorrow be more Iron Man than steady hands?

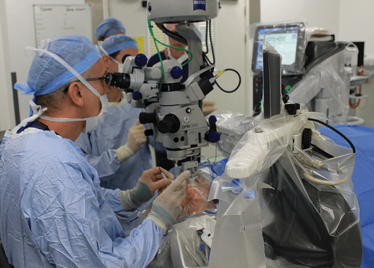

It’s mid-afternoon on the last day of August. Robert MacLaren, Professor of Ophthalmology at the University of Oxford, looks both happy and relieved: the procedure is over. It has been a success, and his patient is being wheeled out of Theatre 7 of the Oxford Eye Hospital. He stands, then steps away from the surgical microscope, and within seconds he’s surrounded by a phalanx of people in blue scrubs congratulating him. There’s laughter, handshakes and elation all round; today was a good day at the office. But only a few minutes beforehand, nobody was speaking: the room was dimmed, the tension palpable. Why? Robert was in the process of making history. He was the first person in the world to perform robot-assisted eye surgery (an internal limiting membrane, or ILM, peel) on a live patient.

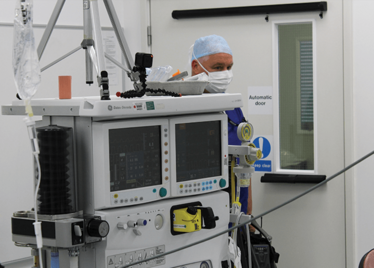

The room was busy: by the end of the procedure I could count 18 people. In addition to the theatre staff and the consultant anesthetist, Robert’s fellow, Thomas (Tom) Edwards, had been there, assisting and observing (he would go on to perform the second-ever robot-assisted eye surgery later that day). The media were present: the John Radcliffe Hospital’s own staff, the BBC’s cameraman Martin Roberts, and me. So were representatives from Preceyes, the company that built the robot: their Medical Director, Marc de Smet; two of their engineers, Maarten Beelen and Thijs Meenink; and their CEO, Perry van Rijsingen. Next to Robert and Tom was Bhim Kala, the sister in charge of the operating theatre, and at the foot of the patient was the consultant anesthetist, Andrew Farmery. To my eyes, they all looked even happier and more relieved than Robert.

Robot-assisted surgery isn’t new. The first surgical robot, Arthrobot, was used back in 1983 to manipulate patients’ knee joints into the appropriate position for each stage of the procedure. Today, a number of surgical robots are in use, the most famous being Intuitive Surgical’s da Vinci laparoscopic surgical system. What’s interesting is how the design of these robots has evolved over the 33 years since Arthrobot, and how this mirrors the development of autonomous cars.

To understand this, let me tell you the story of a robot called Sedasys. Johnson & Johnson designed, developed and marketed it, claiming that it could eliminate the need for an anesthetist in the operating room: any doctor or nurse could operate the device and sedate a patient, at a tenth of the cost of having a human do it. Indeed, the FDA approved it on that basis. Yet J&J withdrew the robot from the market in March 2016. Why? Poor sales. There was a lot of resistance to its introduction; anesthetists certainly weren’t happy, and the American Society of Anesthesiologists lobbied hard against it, questioning the device’s safety. It didn’t sell, and the product was dropped. The lesson? To get hospitals to trust robots, advances need to be introduced incrementally. Sedasys might have been the perfect tool for the job, but it made doctors feel obsolete. To succeed today, a robot has to make them feel like fighter pilots: hands on the joystick, in total control of the situation.

You can see a parallel evolution with cars. Everybody’s a great driver, but… first came cruise control, then adaptive cruise control, then lane assist, then park assist. Add in GPS and stereo imaging of the surrounding road, and you have what Tesla call Autopilot, what Mercedes call Distronic and what Volvo call Driver Assist. Even now, though, drivers are supposed to pay attention and take control when the car’s computer can’t cope, much as surgeons might step in during a robot-assisted procedure. So how long until cars are fully autonomous, and drivers submit to becoming passengers in their own vehicles? And will surgeons ever allow procedures to be planned by algorithm and executed by robot?

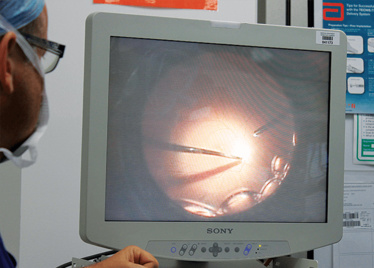

Two themes have become clear. First, most robots used in surgical procedures make small incisions with high precision, enabling surgeons to be far less invasive than even the nimblest among them could manage by hand. The second theme is improved imaging. Much like the march of heads-up displays and intraoperative OCT (iOCT) in vitreoretinal surgery, surgical robots can have integrated cameras and three-dimensional lightfield imaging, and they can even use near-infrared light sources to excite fluorescent labels, as in the da Vinci system’s “Firefly” mode. Imaging data can be displayed on a screen and augmented with relevant data from other sources, such as CT or MRI scans. Google has even been getting in on the act, with image-processing algorithms that take a video feed and overlay information, like a map of blood vessels or nerves, onto the image. Might all of this augmented reality make surgeons feel less like fighter pilots and more like Iron Man?

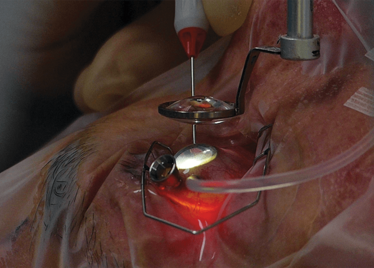

Advanced imaging. Small incisions. High precision. So why haven’t robots been used for eye surgery before now? There are three main reasons: size, access and precision, because what is precise enough elsewhere in the body isn’t precise enough for the eye. To be of value, an eye robot needs to be small, at least as maneuverable as a surgeon’s hand, and more precise. To operate within the eye, incisions have to be made, and the challenge for human and robot alike is to perform the surgery without enlarging the hole or causing additional trauma. We’ve covered the Preceyes robot in detail previously (1), but there are five important points to note about the robot used in the ground-breaking surgery I witnessed that afternoon in Oxford.

First, for a surgical robot, it’s incredibly small. It fits unobtrusively on a surgical table, a considerable engineering feat almost a decade in the making.

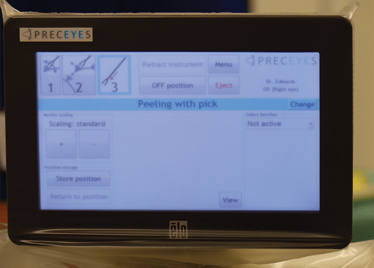

Second, it’s incredibly maneuverable, with very broad intraocular access: it can reach everything a surgeon can. Its point of rotation is the point of entry into the eye, so the instrument pivots about the incision and there’s essentially no rotational force on it.

Third, the control system filters out tremor, aiding precision. The robot currently has 10 µm precision, roughly ten times better than can be achieved by hand. That has huge implications for the future subretinal delivery of gene and stem cell therapies, and could also help experienced surgeons stay in the game for longer.
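To give a feel for what “filtering out tremor” can mean, here is a minimal Python sketch using a simple first-order low-pass filter. It is an illustration under stated assumptions, not a description of how the Preceyes control system actually works: the `low_pass` function, the 1 kHz sampling rate, the 2 Hz cutoff and the 10 Hz, ±50 µm tremor are all hypothetical. The idea is that slow, deliberate hand movements pass through while faster oscillations are damped.

```python
import math


def low_pass(samples, sample_rate_hz, cutoff_hz):
    """First-order low-pass filter (exponential smoothing) over position samples.

    Hypothetical illustration: slow, intended movements pass through almost
    unchanged, while faster oscillations such as tremor are attenuated.
    """
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # filter time constant
    alpha = dt / (rc + dt)                   # smoothing factor between 0 and 1
    filtered = []
    y = samples[0]
    for x in samples:
        y += alpha * (x - y)  # move a fraction of the way towards the new sample
        filtered.append(y)
    return filtered


# Assumed scenario: the surgeon tries to hold the instrument at 1000 µm
# while a 10 Hz tremor adds ±50 µm of unwanted motion.
RATE_HZ = 1000
hold_um = 1000.0
t = [i / RATE_HZ for i in range(2 * RATE_HZ)]  # 2 seconds of samples
hand_um = [hold_um + 50.0 * math.sin(2 * math.pi * 10.0 * ti) for ti in t]

smoothed_um = low_pass(hand_um, RATE_HZ, cutoff_hz=2.0)

# Compare the residual wobble once the filter has settled (second half of the run).
raw_wobble = max(abs(x - hold_um) for x in hand_um[RATE_HZ:])
filtered_wobble = max(abs(y - hold_um) for y in smoothed_um[RATE_HZ:])
print(f"raw tremor: {raw_wobble:.1f} µm, filtered: {filtered_wobble:.1f} µm")
```

With these assumed numbers, the filter cuts the 50 µm wobble to roughly a fifth of its size. The price of such a simple filter is some lag on deliberate movements, so a real surgical system may well use a more sophisticated approach.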

Fourth, the robot has positional memory: if Robert had let go of the robot arm manipulator, the instrument would have stayed in position in the eye. He didn’t, but had he wanted to rest his hands during the ILM peel, he could have done so. It’s hard to overstate how much of a relief this will be for ophthalmic surgeons, who currently can’t down tools for a minute and “take a breather” during long, delicate intraocular procedures.

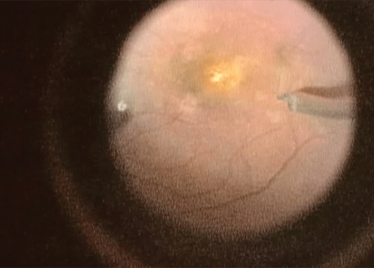

Finally, the robot has a Z-axis (depth) limit: you specify a depth, and the robot will not let its arm move any deeper, however hard the surgeon pushes down on the controls. This is a valuable safety feature in procedures like ILM peels, where you want to peel away the ~2.5 µm thick membrane without touching the retina.
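Conceptually, a depth limit means every commanded position is checked against the chosen depth before it reaches the instrument. Here is a minimal Python sketch, assuming a hypothetical coordinate frame in which larger z means deeper, and using hypothetical names (`Position`, `apply_depth_limit`); it is not the Preceyes software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Position:
    """Instrument tip position in micrometres (hypothetical coordinate frame)."""
    x_um: float
    y_um: float
    z_um: float  # depth: larger values are assumed to be deeper, towards the retina


def apply_depth_limit(commanded: Position, z_limit_um: float) -> Position:
    """Follow the surgeon's x/y command exactly, but never go deeper than z_limit_um."""
    return Position(commanded.x_um, commanded.y_um, min(commanded.z_um, z_limit_um))


# The surgeon pushes steadily deeper; the (assumed) limit is set at 300 µm.
commands = [Position(0, 0, 100), Position(5, 0, 250), Position(5, 5, 400), Position(10, 5, 900)]
executed = [apply_depth_limit(c, z_limit_um=300) for c in commands]
for c, e in zip(commands, executed):
    print(f"commanded z={c.z_um} µm -> executed z={e.z_um} µm")
```

However far the surgeon pushes in this sketch, the executed depth stops at 300 µm, while sideways movement is passed through unchanged.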

The ILM peel was really only a proof of concept. What Robert MacLaren has in mind for the robot is the subretinal delivery of gene and stem cell therapy. To that end, he’s currently working with NightstaRx on a gene therapy for choroideremia, and on embryonic stem cells for a number of retinal diseases. In practice, both approaches require fluid to be injected subretinally, precisely and at a controlled rate, through a tiny hole in a diseased and possibly friable retina. This is approaching the limits of the human hand: to do it safely and consistently, you need the precision of a robot. Imagine trying to find the same hole again by hand to apply a second dose. Put another way, the whole promise of gene and stem cell therapies for treating retinal degenerative disease appears to be linked to the development of robotic eye surgery.

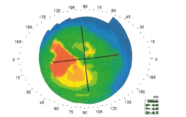

The Preceyes robot continues to be developed to encompass more techniques, such as cannulating veins and more of the common procedures of vitreoretinal surgery. One of the biggest pushes is image integration, which will unlock considerably more of the robot’s potential. There’s already a version that includes A-scan iOCT, in which the instrument can be programmed to stop 10 µm from the retina. In simulations where the robot is targeted at a sheet of paper, if you lift the paper up (simulating a patient sitting up), the robotic arm pulls straight back, maintaining that distance. The iOCT gives you huge magnification of the retina, and the robot gives you incredibly fine control of surgical instruments. Together, they completely change the scale at which surgeons can work, and open up a plethora of new options in retinal surgery.
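The paper demonstration can be thought of as a feedback loop: on each cycle, the A-scan reports the distance from instrument tip to surface, and the robot moves by a fraction of the difference from the 10 µm target. The Python sketch below uses a simple proportional controller to illustrate the principle; the `track_surface` function, the gain, the lift speed and the cycle counts are assumptions for illustration, not details of the Preceyes system.

```python
def track_surface(surface_z_um, target_um=10.0, gain=0.5, tip_start_um=0.0):
    """Proportional controller keeping the instrument tip target_um above a moving surface.

    z is depth (larger = deeper, an assumed convention). Each cycle, a
    hypothetical A-scan measures the tip-to-surface gap and the tip moves
    by `gain` times the error from the target.
    """
    tip = tip_start_um
    tips, distances = [], []
    for surface in surface_z_um:
        distance = surface - tip        # measured gap (the A-scan reading)
        error = distance - target_um    # > 0: too far away, < 0: too close
        tip += gain * error             # advance if too far, pull back if too close
        distances.append(distance)
        tips.append(tip)
    return tips, distances


# The "paper" sits still, is lifted towards the instrument by 1 µm per cycle
# for 200 cycles, then stays put again.
surface = [10.0] * 20 + [10.0 - i for i in range(1, 201)] + [-190.0] * 200
tips, distances = track_surface(surface)

print(f"closest approach: {min(distances):.2f} µm")
print(f"final gap: {distances[-1]:.2f} µm, tip moved back {-tips[-1]:.1f} µm")
```

In this model, while the paper rises the tip lags slightly behind, so the measured gap dips to about 8 µm, then settles back to 10 µm once the lifting stops, with the tip about 200 µm further back than where it started. A higher gain or a faster control loop would shrink that lag.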

Let’s speculate on what life will be like for a retinal surgeon in 2026. It’s not particularly far-fetched to imagine them commuting to and from work in an autonomous vehicle. They might plan a patient’s surgery by exploring the retinal anatomy in 3D with a virtual reality headset, with “decision support” data provided by virtual assistants. When it comes to the procedure, they might sit in a control booth, directing the robotic assistant according to the plan determined earlier. Rather than peering down a surgical microscope, they’ll wear a VR headset or gaze at a 3D flat-panel display, seeing the procedure from multiple viewpoints with relevant (and Iron Man-esque) real-time data overlaid onto the video feeds. Their trainees will be able to follow the procedure in real time, or at their leisure, wherever they have a smartphone and a data connection. There will have been many important milestones on the journey to get there: Arthrobot, da Vinci, the first discussions in Amsterdam of the project that ultimately became Preceyes. But I’m certain that August 31, 2016 will be viewed as a seminal date.

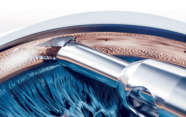

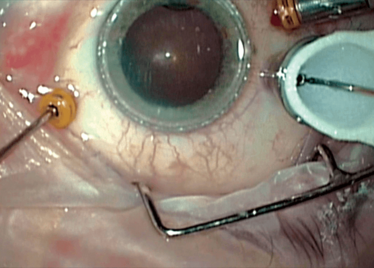

Peeling the membrane from the retina with the Preceyes robot.

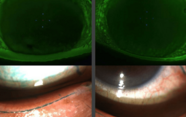

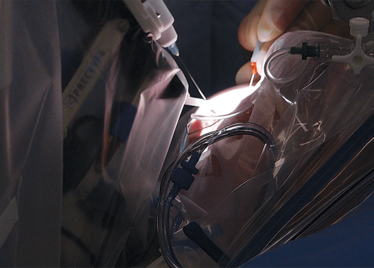

The Preceyes robot entering a patient's eye for the first time.

Robert MacLaren on Making History

1. M de Smet, “Eye, Robot”, The Ophthalmologist, 15, 18–25 (2015).

I spent seven years as a medical writer, writing primary and review manuscripts, congress presentations and marketing materials for numerous – and mostly German – pharmaceutical companies. Prior to my adventures in medical communications, I was a Wellcome Trust PhD student at the University of Edinburgh.