A Fantastic Voyage – into Your Patients’ Eyes

Inexpensive yet excellent virtual reality headsets are here – and are about to profoundly transform ophthalmology.

At a Glance

- Virtual reality (VR) headsets are now mature products, and about to become consumer items

- Ophthalmology will benefit – virtual reconstructions of patients’ ocular anatomy will transform diagnosis, training, surgical planning and patient education, and remote, VR instrument training becomes a possibility

- As computer processing power increases, a future of real-time, intraoperative, 3D OCT imaging could be a reality sooner than you’d think

- Telemedicine is opened up: stereoscopic eye tests and reading desk assessments could soon be performed by patients at home, which holds great diagnostic and cost-saving potential

In 2011, Palmer Luckey was an 18-year-old virtual reality (VR) gaming enthusiast. He had collected a range of VR headsets, and felt frustrated by the limitations of the hardware he had purchased over the years – so he hacked together a better VR headset in his parents’ garage… and three years later, Facebook paid US$2 billion to purchase Oculus VR, the company he had formed to develop the headset.

When Facebook pays US$2 billion for a company, people pay attention, but the gaming and tech worlds were already well aware of Oculus’ VR headset, Rift. Luckey and his team had solved all of the big problems that existing VR headsets had: latency, head tracking, screens that blur with fast head motions – and all without requiring expensive optics. In essence, Rift shouldn’t make you seasick when you wear it, unlike what came before. Rift was big news well before Mark Zuckerberg opened his checkbook: he viewed it as the future of how we will interact with computers.

What does this have to do with ophthalmology?

One of the most significant advances in ophthalmology has been the dramatic improvement in how we image the eye; the most recent advance with the biggest impact has been in vivo optical coherence tomography (OCT). OCT imaging of the anterior segment allows the cornea, anterior chamber, iris and angle to be viewed, but it’s OCT’s ability to image the retina and its layers, down to the choroid, that has been truly sensational. OCT data come in three dimensions – but because of the (currently inadequate) way we view three-dimensional (3D) data, we only ever see them in two, on a computer screen. The 3D data, combined with a VR headset that makes it feasible to explore them, immediately open up a world of possibilities for exploring the ocular anatomy – and much, much more.

The power of the triangle mesh

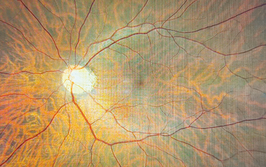

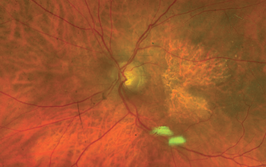

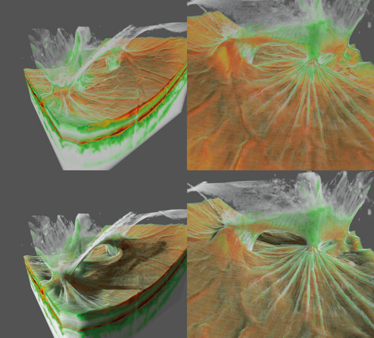

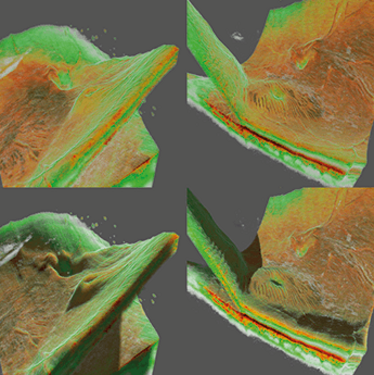

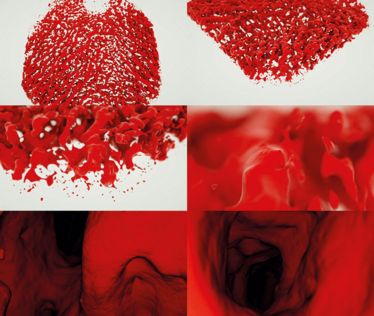

In computer modeling, there’s the concept of the voxel. Each voxel represents a value on a 3D grid, much like a pixel represents a value on a 2D grid. If we model an OCT image of the retina in three dimensions (Figure 1), we start to get an idea of structures. However, a voxel literally represents only a single point on the grid – the space between voxels is not represented – so voxels alone can’t be used to recreate the ocular anatomy in 3D; you only get a mist-like representation. Building on computer programs used for special effects in the movie and entertainment industry (Maxon Cinema 4D, X-Particles), I’ve developed several algorithms that improve the way we can view 3D OCT datasets. The first set of algorithms adds ray-traced shading to the voxel displays, which brings clarity and crispness to subtle structures and in turn increases the amount of information that can be gleaned from the data (Figures 1 and 2). The second algorithm creates a 3D triangle mesh – much like Pixar creates in its animations – that enables you to segment entire structures and create a solid virtual object (Figure 3). You can then view the anatomy, not as separate layers, but in terms of how each point in the anatomy interacts with other points (Figure 4). This improves the shading of structures over and above what traditional OCT visualization systems can do, making even the most subtle structures easy to see, and it enables the viewer to fly in and out of objects without losing data integrity.
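To make the step from voxels to triangles concrete, here is a minimal Python sketch. It is not the segmentation algorithm described above, whose details are not given here; it is a much simpler, blocky surface extraction. It assumes the volume is a nested list indexed as `volume[z][y][x]` and that a single intensity `threshold` separates tissue from background. The names `voxels_to_triangles`, `is_solid` and `FACES` are illustrative only.

```python
from typing import List, Tuple

Vertex = Tuple[int, int, int]
Triangle = Tuple[Vertex, Vertex, Vertex]

# For each of the six neighbour directions (dx, dy, dz): the four corners of
# the cube face shared with that neighbour, listed in order around the face
# as offsets from the voxel's minimum corner.
FACES = {
    (1, 0, 0): [(1, 0, 0), (1, 1, 0), (1, 1, 1), (1, 0, 1)],
    (-1, 0, 0): [(0, 0, 0), (0, 0, 1), (0, 1, 1), (0, 1, 0)],
    (0, 1, 0): [(0, 1, 0), (0, 1, 1), (1, 1, 1), (1, 1, 0)],
    (0, -1, 0): [(0, 0, 0), (1, 0, 0), (1, 0, 1), (0, 0, 1)],
    (0, 0, 1): [(0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)],
    (0, 0, -1): [(0, 0, 0), (0, 1, 0), (1, 1, 0), (1, 0, 0)],
}


def is_solid(volume, x: int, y: int, z: int, threshold: float) -> bool:
    """True if (x, y, z) lies inside the grid and its value reaches threshold."""
    if 0 <= z < len(volume) and 0 <= y < len(volume[0]) and 0 <= x < len(volume[0][0]):
        return volume[z][y][x] >= threshold
    return False


def voxels_to_triangles(volume, threshold: float) -> List[Triangle]:
    """Emit two triangles for every face between a solid voxel and empty space."""
    triangles: List[Triangle] = []
    for z, plane in enumerate(volume):
        for y, row in enumerate(plane):
            for x, value in enumerate(row):
                if value < threshold:
                    continue  # background voxel: contributes no surface
                for (dx, dy, dz), corners in FACES.items():
                    if is_solid(volume, x + dx, y + dy, z + dz, threshold):
                        continue  # face is hidden inside the structure
                    a, b, c, d = [(x + cx, y + cy, z + cz) for cx, cy, cz in corners]
                    triangles.append((a, b, c))  # split the square face
                    triangles.append((a, c, d))  # into two triangles
    return triangles


# Two neighbouring bright voxels: 10 exposed faces, so 20 triangles.
volume = [[[0.9, 0.8]]]
print(len(voxels_to_triangles(volume, threshold=0.5)))  # 20
```

Unlike the isolated points of a voxel display, the triangles form a closed surface around the structure, which is what makes shading, fly-throughs and later simulation possible. Production tools typically use smoother methods, such as marching cubes, rather than this cube-face approach.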


Figure 1. Voxel rendering of a case of posterior uveitis. The top two images show a non-shaded voxel rendering of vitreoretinal traction after posterior uveitis. The bottom two images show a ray-traced shaded version of the same case. The ray-traced shading helps increase the contrast of subtle structures on the vitreomacular interface and helps give a better understanding of the entire three-dimensional structure of the pathology. A Topcon Atlantis DRI swept-source OCT was used to collect the data.

Figure 2. Voxel renderings of two different retinal detachments. The images on the left show a “macula off” retinal detachment in shaded and non-shaded versions. The right two images show a “macula on” retinal detachment in shaded and non-shaded modes. The left two images show that the macula is acutely threatened by detachment and needs to be treated immediately. The ray-traced shading helps illustrate exactly where the detachment is and shows the folds in the macula indicating the immediate threat. A Topcon Atlantis DRI swept-source OCT was used to collect the data.

Figure 3. Mesh visualization of a vitreomacular traction syndrome case. This group of images shows a triangle mesh visualization of a vitreomacular traction syndrome. This type of visualization creates a more solid representation of the data by first segmenting the information into triangle meshes (as seen in the bottom right corner). This information can also be used at a later stage for physical and fluid dynamic simulation. A Topcon Atlantis DRI swept-source OCT was used to collect the data.

Figure 4. Mesh visualization of a posterior uveitis. These images show the same case of vitreoretinal traction after uveitis (as seen in Figure 1). In this case they are rendered with the mesh segmentation algorithm. A Topcon Atlantis DRI swept-source OCT was used to collect the data.

The other advantage of the triangle mesh is that each triangle can have physical properties ascribed to it: elasticity, bendability, tearability and more. Although I don’t currently have access to the processing power required to do this (see “GPUs and CUDA” below), the idea is to perform a surgical simulation in the Oculus Rift. This could be revolutionary. For example, you could plan retinal surgery and see exactly where you should make an incision, or view exactly where you can lift membranes – and see the (simulated) consequences of those actions in a three-dimensional model of your patient’s eye.
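As a rough illustration of what ascribing physical properties to a triangle could look like in code, the Python sketch below attaches two properties to each triangle and checks whether a deformation would tear it. Everything here is an assumption made for illustration: the class and function names, the units, the simple linear (Hooke-style) tension estimate and the tear rule are hypothetical, and a real surgical simulation would need a far more sophisticated tissue model.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vertex = Tuple[float, float, float]
Corners = Tuple[Vertex, Vertex, Vertex]


@dataclass
class TissueProperties:
    elasticity: float   # hypothetical stiffness, arbitrary units
    tear_strain: float  # hypothetical relative stretch at which tissue tears


@dataclass
class MeshTriangle:
    rest_corners: Corners          # corner positions before any deformation
    properties: TissueProperties
    torn: bool = False


def edge_lengths(corners: Corners):
    """Lengths of the three edges of a triangle."""
    a, b, c = corners
    return [math.dist(a, b), math.dist(b, c), math.dist(c, a)]


def max_edge_strain(rest: Corners, deformed: Corners) -> float:
    """Largest relative change in length over the three edges."""
    pairs = zip(edge_lengths(rest), edge_lengths(deformed))
    return max((new - old) / old for old, new in pairs)


def stretch(triangle: MeshTriangle, deformed: Corners) -> float:
    """Mark the triangle as torn if over-stretched; return a tension estimate."""
    strain = max_edge_strain(triangle.rest_corners, deformed)
    if strain > triangle.properties.tear_strain:
        triangle.torn = True
    # Linear estimate: tension grows in proportion to stretch, never negative.
    return triangle.properties.elasticity * max(strain, 0.0)


# A membrane triangle that tears once any edge is stretched by more than 50%.
membrane = MeshTriangle(
    rest_corners=((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
    properties=TissueProperties(elasticity=2.0, tear_strain=0.5),
)
stretch(membrane, ((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 1.0, 0.0)))
print(membrane.torn)  # True
```

Because the properties live on individual triangles, different tissues in the same mesh (a membrane versus the retina beneath it, say) could be given different values.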

.....NVIDIA GeForce GTX TITAN graphics card, which contains 5760 CUDA cores.

GPUs and CUDA: An exponential shift in computer processing power

The scientific and medical communities have more to thank the videogaming community for than just VR headsets. Gamers’ unquenchable thirst for ever-better graphics led to the development of the GPU: the graphics processing unit. Rather than have the central processing unit (CPU) of your phone, computer or console do all of the work, the GPU takes care of displaying the graphics. It’s likely that the CPU in your phone, PC or Mac has two or four cores, or maybe eight if you’ve paid for the best. GPUs have many thousands – and they can do more than just display smooth, high-resolution videogame graphics at a super-high frame rate. The advent of GPU programmability using tools like NVIDIA’s CUDA (Compute Unified Device Architecture, which comprises the graphics card platform and some extensions to programming languages that let the GPU’s power be exploited) has seen computer graphics cards evolve into powerful parallel computing tools, capable of carrying out hundreds, even thousands, of instructions simultaneously. This has been used to great effect in cryptography, audio-signal processing, molecular modeling, computational biology and, in this case, generating three-dimensional reconstructions of ocular anatomy from raw imaging data in minutes rather than hours.
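The reason imaging data suits this kind of hardware is that many processing steps treat every voxel independently, so the work can be divided among as many workers as are available. The Python sketch below shows only that structure, using a small thread pool as a stand-in for GPU cores; it is not CUDA code, and the function names and the choice of a simple intensity rescaling step are assumptions for illustration. Standard Python threads will not actually speed up this kind of arithmetic; the point is that each slice can be handed off without reference to any other.

```python
from concurrent.futures import ThreadPoolExecutor


def normalize_slice(slice_2d, lo: float, hi: float):
    """Rescale every intensity in one 2D slice of the volume to the range 0..1."""
    span = hi - lo
    return [[(value - lo) / span for value in row] for row in slice_2d]


def normalize_volume_serial(volume, lo: float, hi: float):
    """One worker handles the slices one after another."""
    return [normalize_slice(s, lo, hi) for s in volume]


def normalize_volume_parallel(volume, lo: float, hi: float, workers: int = 4):
    """Each slice is an independent task, so slices can be handed to any worker."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input slices
        return list(pool.map(lambda s: normalize_slice(s, lo, hi), volume))


volume = [
    [[0, 50], [100, 25]],
    [[75, 100], [0, 50]],
]
same = normalize_volume_parallel(volume, 0, 100) == normalize_volume_serial(volume, 0, 100)
print(same)  # True
```

On a GPU the same idea is typically taken much further, with one lightweight thread per voxel rather than one task per slice.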

A better understanding of the choroidal vasculature

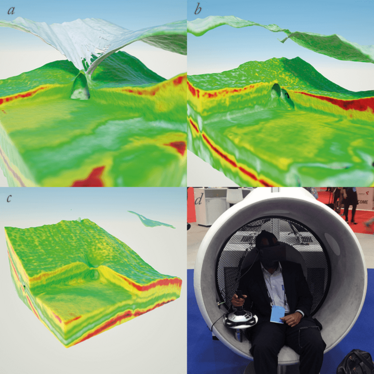

As spectral domain OCT enables imaging of the choroid – the most vascular structure in the body – this technology means that you can not only model and explore your patient’s vasculature in three dimensions (Figure 5), but also simulate your patient’s choroidal blood flow and the potential effects of vasoactive drugs on it. One example is male potency enhancers. Men of a certain age start taking them for one reason, but they can then cause several problems in the posterior segment of the eye. They immediately increase the thickness of the choroid, and cases of ischemic optic neuropathy (sometimes referred to as a “stroke” of the optic nerve) have been reported. In patients with central serous chorioretinopathy, increases in subretinal fluid have also been described. A better understanding of how the choroidal structures respond to these kinds of pharmacological stimuli will help us far better understand these pathological processes. Additionally, a more complete understanding of the fluid dynamics of the choroid may help us to understand the pathological mechanisms that result in the choroidal neovascularization that occurs in wet age-related macular degeneration.

Figure 5. Mesh visualization of human choroid. These images show a triangle mesh visualization of human choroidal vasculature. The data used to produce the visualization were provided by Wolfgang Drexler’s research group, and were collected using a 1060 nm wavelength OCT. The images are taken from a flight around, then inside the vessels. The algorithm used to produce this enables the user to freely fly around inside the choroidal vessels as one would in a computer game. The longer-wavelength OCT was necessary to penetrate deep enough under the retinal pigment epithelium to see the choroidal vessels.

Transforming surgical planning and improving education

There are other educational aspects too – for example, in the treatment of vitreomacular traction (VMT) with ocriplasmin. You can take a series of OCT images, process and render them in three dimensions, and follow the effects of a single injection of the enzyme over time. Figure 6 provides a good visual example. At first, the VMT is pronounced (Figure 6a), then one week after the injection the traction is released and the cystic swellings are reducing in size (Figure 6b), and by six months, the retina looks completely normal on OCT imaging (Figure 6c). But if this technology could render the 3D environment in real time – so that you and your colleagues or medical students could wander around in a virtual 3D model of the patient’s eye (Figure 6d) while the patient is still sitting there – it could tremendously aid the physician in choosing the best treatment options for the patient. It would also help the patient better understand both their disease, and why certain procedures are therefore necessary. It’s not a 3D animation of a procedure – it’s a 3D rendering of their own anatomy, and a simulation of the surgical procedures that they would be viewing.

Figure 6. Mesh visualization showing the effect of intravitreal injection of ocriplasmin. The first three images show a patient with a vitreomacular traction syndrome before and after intravitreal injection with ocriplasmin; a. shows the retina before injection; b. shows the patient one week after injection; c. shows the patient after six months – an immediate resolution of the traction and a gradual resolution of the intraretinal fluid are clearly visible; d. shows a physician interacting with this case using an Oculus Rift.

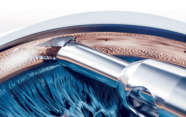

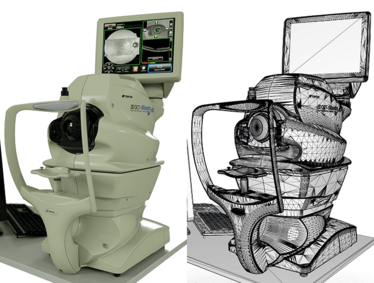

It doesn’t stop at educating trainee ophthalmologists or your patients; technical staff can benefit from a bit of education in virtual reality too. Instrumentation – like the OCT device itself – often requires on-site training from technicians sent by the device’s manufacturer. But if I render the instrument and the parts the user interacts with, and model how the device operates (Figure 7), the technician can receive training on a virtual instrument at a fraction of the cost of a visit from a manufacturer’s representative, in a training course that can be revisited at any time.

Figure 7. Photorealistic rendering of a Topcon Maestro OCT using CUDA GPU unbiased rendering (left). The CAD mesh that formed the basis of the render can be seen on the right.

Understanding IOL “refractive surprises”

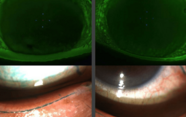

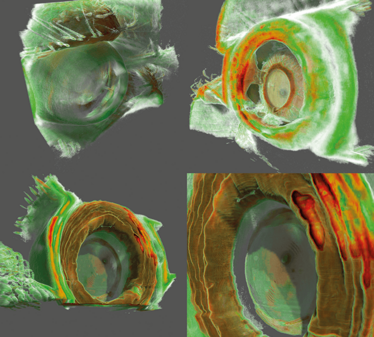

Of course, there are plenty of possibilities in the anterior chamber too. An OCT reconstruction of a patient’s eye after cataract surgery and IOL placement can help you understand why the patient is having optical issues, and see whether something could be done to remedy them (Figure 8). With the addition of corneal topography and tomography data, in the future you may also be able to try out various IOLs prior to surgery, and simulate the point(s) of focus. This could be particularly useful with multifocal IOLs – once you measure the size of the iris and the distance of the lens to it, you can choose the optimal premium IOL in a manner that’s likely to be far more easily explained to your patient.

Figure 8. Anterior chamber OCT. These images show that it is also possible to visualize structures of the anterior chamber using a swept source OCT (Topcon Atlantis DRI swept-source OCT). The top two images show a patient with cataract and a posterior synechia. The bottom two images show a patient with an implanted multifocal lens. The exact position of the lens in relation to the iris, and the lens’ focus rings, are clearly visible.

VR headsets as consumer products: the implications and opportunities

VR devices like Oculus Rift (see sidebar, “The Consumer VR Headset Contenders”) are likely to become consumer technology very rapidly. The Rift can currently be purchased as part of Oculus’ developer kit for US$350 (€280), and the rumored final price of the product is US$200 (€180). Samsung has also revealed the Gear VR – an immersive VR headset that uses your Samsung Galaxy smartphone as the display. That’s not as daft as it sounds – the current prototype version of Oculus Rift uses two Samsung Galaxy Note 3 displays to create its immersive 3D world. Similarly, Zeiss offers the VR ONE, which features an aperture for smartphone-specific adaptor trays, meaning that you don’t need to discard the headset when you upgrade your smartphone. Google almost certainly has the cheapest option – Google Cardboard is free (if you’re prepared to cut some spare cardboard out to Google’s template) or around US$15–20 (€12–16) for a pre-cut kit. Add in your smartphone, open the Android app, and explore your virtual world in all three dimensions.

The widespread availability of these internet-enabled devices raises the possibility of eye tests that can be performed wherever the patient has a device – and not just Snellen tests or Amsler grids – but stereoscopic versions of them, which might enable subtler vision defects to be detected by patients, at home, with the results sent to you almost instantaneously. Amblyopia training could be given in the form of computer games with these VR headsets – the weaker eye receives the training, the stronger eye does not.

Place this against a background of an ongoing, inexorable march of technology. It takes my current set-up about 10 minutes to preprocess a single OCT dataset in 3D. Once the preprocessing is complete, both the rendering of the data and the user’s interactions are performed in real time. In fewer than five years’ time – and for the same cost as today – I predict that the entire process will be achievable in real time. The ability to plan and rehearse surgery on your patient’s own anatomy, and show them what you intend to do and why, is something that could profoundly change ophthalmology as we currently practice it. Outcomes should improve, and things like refractive surprises may be eliminated. Patients could not be better informed about the procedures they’re about to undergo, and you couldn’t ask for a better method of learning and practicing (virtual) surgical techniques on your patient’s anatomy, before performing them for real. Don’t you wish you had this available to you when you were a medical student?

Carl Glittenberg is an ophthalmologist at the Medical Retina Unit of the Rudolf Foundation Hospital, Vienna; the vice chairman of the Karl Landsteiner Institute for Retinal Research and Imaging, and CEO of Glittenberg Medical Visualization.

The Consumer VR Headset Contenders

Oculus Rift DK2

Cost: US$200/€180 (anticipated); US$350/€280 for the Developer Kit v2

Resolution: 1920x1080 – 960x1080 per eye

Field of view: 100°

Weight: 0.44 kg

Release date: Developer Kit v2: July 25th, 2014; commercial version: “Soon”

Flickr/ Othree

Google Cardboard

Cost: DIY: free; kits: US$15–20 (€12–16)

Resolution: Phone-dependent

Field of view: Approximately 90°

Weight: 0.10–0.30 kg* (excluding phone)

Release date: June 26th, 2014

*Depending on the material used.

Samsung Gear VR + Galaxy Note 4

Cost: US$199/€160 (excluding cost of the phone)

Resolution: 2560x1440 – 1280x1440 per eye

Field of view: 96°

Weight: 0.38 kg (excluding phone)

Release date: December 8th, 2014

ZEISS VR ONE

Cost: €99/US$99 plus €9.99/US$9.99 for phone-specific loading tray

Resolution: Phone-dependent

Field of view: Approximately 100°

Weight: 0.10–0.30 kg (excluding phone)

Release date: January 2015 (anticipated)
